Understanding SDLC and STLC

Table of Contents

Understanding STLC (10-30 minutes):

- Test requirements

- Test Plan Components

- Requirement Traceability Matrix

- Test closure report

- Incident report

Section 2

Understanding STLC (10-30 minutes):

I spent more time talking about the Software Testing Life Cycle methodology in the QA Manual Testing Introduction module. So, hopefully much of that is still with you as we progress onto some additional understanding related to this subject. Of course, this course is primarily intended to prepare you, as a test analyst, for the real world. You need to be armed with the right amount of knowledge and skills to be comfortable and professional in the test role.

With that in mind let’s discuss some principles regarding STLC. Consider the following principles:

Principles of testing

- Testing shows the presence of defects, not their absence.

- Don’t go into testing with the mindset that you hope you never find defects. Bugs or Bugsy needs to be your middle name. You need to expect that every time you test you will find a bug. The attitude is not about arrogance. No, it is about uncovering issues to improve the application quality. That is why some test analyst titles include QA, which is short for quality assurance. That is what you do as a test analyst.

- Exhaustive testing is impossible.

- You can never do too much testing. Never accept a request from others to quit while you are ahead. If you test and don’t find a bug, test again and change your approach. In the past I have gone to the point of doing monkey tests. The name might be an exaggeration, but it is the type of testing where you act the way a monkey might act. Launch the application, put the focus on an entry field, and drop your hands on the keyboard. You might be surprised at the results. When an application is displaying lengthy text responses, hit the Enter key or function keys to see if you can interrupt the display. There are a few tests that take thought. Exhaustive testing is not possible, because you can never try every input and every path.

- Early testing saves time and money.

- For example, if you discover a requirement defect during requirement review, it can be easily fixed by correcting the error in a document. But imagine the cost if the defect is not caught until acceptance testing. There is the cost in time to analyze the defect, the cost to find its root cause, the cost to fix the defect, the cost to acknowledge the fix, the cost to run a regression test, and finally the cost to close out the bug cycle and make sure all environments have the software updates.

- Defects cluster together.

- It is a fact that a small number of modules include most of the reported flaws and operational failures. And it is typical to find that modules that have the most complex code flow are high on the list. So, with this knowledge test analysts should increase the number of test cases where the software functions are complex.

- Beware of the pesticide paradox.

- The pesticide paradox says that if you keep running the same tests, they eventually stop finding new defects, just as pests become resistant to a pesticide. Dealing with it is like dealing with the cluster problem. To prevent the pesticide paradox, testing needs to shift its focus to other areas or risks: review the requirements and design documents for defects, write new test cases for areas of the system that were not tested previously, and write test cases that follow different paths or use different test data.

- Testing is context dependent.

- Consider the following thoughts:

- An e-commerce website can require different types of test cases than an API application or a database reporting application.

- Testing of an e-commerce site is different from the testing of an Android application.

- Pay attention to the devices and technologies to settle on the tests

- Absence-of-errors is a fallacy.

- Consider the following thoughts:

- Finding and fixing defects does not help if the application build is unusable.

- If the application does not fulfill the user’s needs and requirements, believing it is ready because no defects remain is the fallacy.
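The monkey test described under the exhaustive-testing principle can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `parse_quantity` is a made-up entry-field handler (it is not part of any application in this course), and the rule that it accepts whole numbers from 1 to 99 is invented for the example. The idea is to throw random keyboard-like input at the field and record anything other than a clean rejection.

```python
import random
import string


def parse_quantity(text: str) -> int:
    """Hypothetical entry-field handler: accepts whole numbers from 1 to 99."""
    value = int(text)  # raises ValueError on non-numeric input
    if not 1 <= value <= 99:
        raise ValueError("quantity out of range")
    return value


def monkey_test(handler, runs: int = 1000, seed: int = 42) -> list:
    """Feed random keyboard-like strings to handler and collect unexpected crashes."""
    rng = random.Random(seed)  # fixed seed so a failing run can be repeated
    crashes = []
    for _ in range(runs):
        length = rng.randint(0, 20)
        text = "".join(rng.choice(string.printable) for _ in range(length))
        try:
            handler(text)
        except ValueError:
            pass  # a clean rejection is the expected behavior
        except Exception as exc:  # anything else is a finding worth reporting
            crashes.append((text, repr(exc)))
    return crashes


crashes = monkey_test(parse_quantity)
print(f"{len(crashes)} unexpected failures")
```

The fixed seed is a deliberate choice. Random input is only useful to a test analyst if the exact input that caused a failure can be reproduced for the bug report.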

Test Events or Methods

Here is one of the areas of testing that is plagued with a wealth of names to associate with a test event or a method of testing. I want to give you only these two names – event and method. I like these two names because they seem to fit the best. If you understand the meanings, the names seem appropriate. An event is an incident in time. A method is a process or approach.

So, a list of test methods or events is as follows:

1) Unit Tests. 2) Integration Tests. 3) System Tests. 4) Regression Tests. 5) Acceptance Tests.

These tests represent events that define a designation of an activity at some point that is scheduled to be accomplished. These test events are also a process or approach to testing a target application at a predetermined time in the test cycle or development cycle.

Consider the test events as shown in the chart. It provides the WHEN and WHY for these tests.

| Event or Method | When | Purpose |
| --- | --- | --- |
| Unit Test | After or during module development | Identify if module is working as expected |
| Integration Test | When module functionality for email, print, and other services is ready | Ensure the services are operational |
| System Test | When one or all functions are ready | Confirm functions are working and find any defects |
| Regression Test | As defects are fixed | Confirm that fixes don’t cause other defects |
| Acceptance Test | Development turnover to the business | User testing of business scenarios |
| Performance Test | | |
| Usability Test | | |
| Security Test | | |

STLC Deliverables

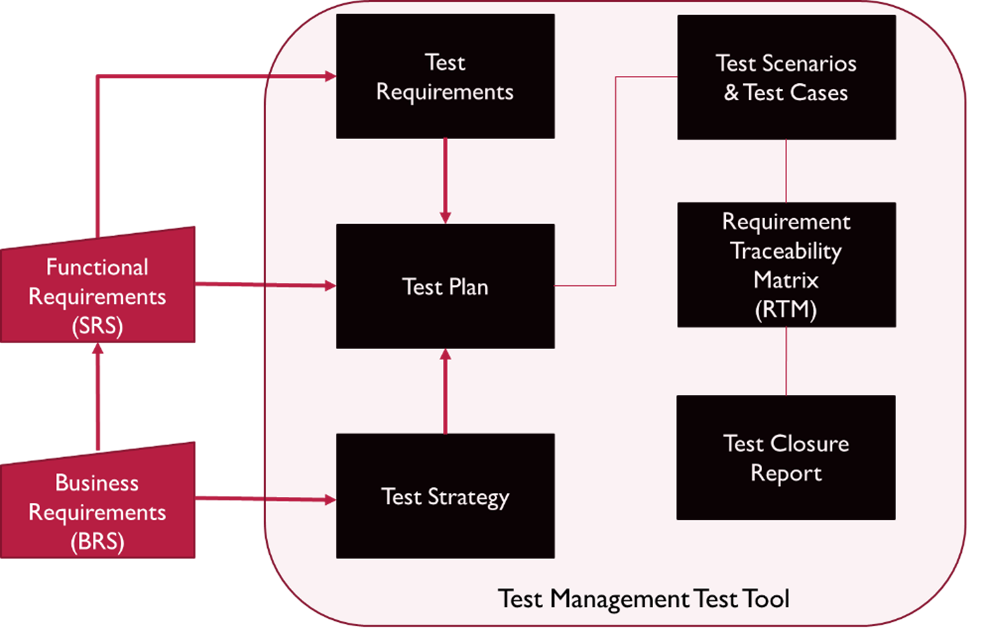

There is a kind of paper trail here. The business requirements, usually documented by stakeholders, are passed on to the development team as one of the input documents for the project. The project or development team reviews the business requirement specifications (BRS) and usually transforms them into what is known as functional requirements or Software Requirement Specifications (SRS). This process benefits the project because it shows whether the project team has understood the business needs.

To make sure that this is the case, the test team has one more opportunity to test that theory and make it a reality by writing a list of test requirements. Sometimes due to time the test team must take the SRS as-is and treat it as the official test requirements document.

- Test Plan Components

- A test plan includes the test strategies, objectives, schedule, estimations, deadlines, and resources required to complete the test project.

- A test plan is derived from software requirement specifications and test requirements if available.

- Test strategy

- Test strategy is a section of a test plan. The test strategy is derived from business requirement specifications (BRS). It is a description of the overall testing strategy, including the types of tests (the test events) that will be performed.

- The test strategy is a set of instructions or protocols which explain the test design and determine how the test should be performed. It answers the question “What test events will be in-scope?” By default it clarifies what is out-of-scope.

- Test scenario – A Test Scenario is a statement describing the functionality of the application to be tested. It is used for end-to-end testing of a feature and is generally derived from the use cases. A single test scenario can cover one or more test cases.

- A test case is a way of documenting the steps to perform in a test that seeks to confirm expected results. Each test case is associated with an ID, description, instruction steps, and expected results. Test cases usually live in a spreadsheet document or in a product that supports test management. There is some other information that should be tracked, and I like to put it into a separate spreadsheet if I am doing it manually: the status of passed or failed, the bug ID if a defect is detected, and the actual results to document unexpected results.

- Test data is not always a part of the test plan, but how the test data will be generated should always be documented. It could come from an ETL process, from an automated test tool that uses the new application’s add functions to input data, from SQL insert statements, or from manual entry if the new add function is available.

- Requirement Traceability Matrix (RTM) is a document that records the mapping between the high-level requirements and the test cases in the form of a table. That’s how it ensures that the Test Plan covers all the requirements and links to their latest version.

- Test closure report is a report that describes the testing activities performed by a test team. It is created once the testing stage is completed and meets the exit criteria. Test closure reports are usually created by a lead test analyst, and then reviewed by stakeholders.

- Incident report is a document reporting on any event that occurred during the testing which requires further investigation. Sometimes there are issues and defects reported that are outstanding. This means that the items were not processed to completion. If the priority and severity levels are low, these items may be deemed unimportant.
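To make the test case fields from the list above concrete, here is a minimal sketch in Python. It keeps the planning fields (ID, description, instruction steps, expected results) apart from the tracking fields (status, actual results, bug ID), which mirrors the separate spreadsheet mentioned in the test case bullet. The class names, the IDs such as `TC-001` and `BUG-17`, and the sample login steps are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ManualTestCase:
    """Planning fields: ID, description, instruction steps, expected results."""
    case_id: str
    description: str
    steps: List[str]
    expected_result: str


@dataclass
class ExecutionRecord:
    """Tracking fields kept separately: status, actual results, bug ID."""
    case_id: str
    status: str                    # "passed" or "failed"
    actual_result: str = ""
    bug_id: Optional[str] = None   # filled in only when a defect is logged


def summarize(records: List[ExecutionRecord]) -> dict:
    """Count passed and failed records for a simple status report."""
    summary = {"passed": 0, "failed": 0}
    for record in records:
        summary[record.status] += 1
    return summary


# Hypothetical example data, invented for illustration only.
login_case = ManualTestCase(
    case_id="TC-001",
    description="Registered user can log in",
    steps=["Open the home page", "Select Login", "Enter valid credentials", "Submit"],
    expected_result="User profile page is displayed",
)
records = [
    ExecutionRecord("TC-001", "passed", "User profile page is displayed"),
    ExecutionRecord("TC-002", "failed", "Blank page after submit", bug_id="BUG-17"),
]
print(summarize(records))
```

Keeping the execution record separate means the same test case can be run many times, for example in each regression cycle, without rewriting its steps.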

Test Plan Process

Real World Testimonies

I would like to use myself to help you get some idea of what I encountered in the real world taking test jobs. I have worked on numerous small and large development projects. I have been a developer, test analyst, and test lead on projects. As a programmer I have had plenty of exposure to languages and IDEs. Nowadays I call them test environments because I do so many test activities. Yes, I have had many roles in the test world. The titles are not important. But the roles stretched from test analyst to QA engineer.

I have rarely started on a project on day one. On one project I was hired to oversee the acceptance test project. This was a large SAP EHS implementation project, something like the health care case study. EHS stands for Environment, Health, and Safety. All the test analysts, about 30, were employees of the company who were assigned to conduct manual testing and document findings in a test management product called ALM. ALM is a part of my test tool skillset. I was responsible for reporting to the test manager. ALM makes it easy to do management reporting and status monitoring. It’s easy to get spoiled.

On several projects, I was a QA test analyst that was responsible for performance testing SAP ECC applications. Often, I was brought in near the end of the functional testing but before acceptance testing. This type of testing usually lasts two to six months. I have remained on a contract longer by switching to functional test roles when the timing was right. Just like with functional testing, for performance testing you are expected to find something that is not meeting expectations. One is about finding functional defects while the other is about finding performance defects. Of course, getting the defects fixed is the goal.

I also worked 20 years in the middle of my career as an employee who participated in developer, functional, and performance test roles. Sometimes I was part of a team and other times there was no team. Sometimes all the deliverables were expected while other times some deliverables were not required. The size of the project or the number of people involved makes the difference.

Case Study

Unlike the case study I chose for SDLC, the case study I chose for STLC is not what I would consider a finished product. But I believe that it is still a good thing. What will happen is that I will provide you with excerpts of the case study which is not as thorough as the SDLC case study. But then I have made some assumptions that I will use to make the case study more complete. Both case study documents will be available as course assets for you. The company that supplied the QA Team in the case study is called QA Mentor.

Context

The client is an online car dealership. The application they were in the process of developing needed to be stable, yet flexible. It also required integration with multiple third-party products such as MS Office and Adobe, as well as with multiple databases. They needed an experienced QA team to help them perform functional testing and performance testing on all aspects of their complex system with a strong focus on the financial calculations components.

Challenge

The application to be tested needed to support multiple languages, multi-user access, integrate with third party solutions, and be compatible with multiple. The lack of functional specifications and design layout and structure posed a significant barrier for information gathering and planning. The testing involved with this project was to be extremely complex, involving many different integration points and comprising multiple user roles. The development team was also remote, which added communication and coordination issues into the mix.

Solution

QA Mentor immediately set up a process to facilitate effective communication between the offsite teams. After engaging in multiple in-depth meetings with business analysts, developers, and subject matter experts, they were able to put together a proper functional test plan.

Quickly following suit, a performance test plan was started. Research began with additional meetings with database experts, architects, network engineers, and developers. A second QA Mentor team focused solely on the performance test plan while the functional team worked on the complex functional and system testing.

The functional test plan and subsequent execution of the plan involved multi-platform functional testing with an emphasis on financial calculations. QA Mentor utilized their own language experts to assist with the multi-language testing. Multi-user access was also performed, using every member of the functional test team at once. The next phase involved third-party integration tests, including full printing support.

Finally, database integration processes were tested using all known database types and configurations. Over 150 defects were uncovered, and many enhancements were added due to suggestions made by the QA Mentor team.

This is the end of the case study data except for some test strategy data which I will integrate. The case study as written is more like a Test Closure Report. But we can work with that. However, they did mention that there was a lack of functional specifications and design layout and structure. So, I want to approach this as if I found some of that documentation. The only caveat is that it is still not complete on purpose. But it is sufficient to use the input for proceeding further with STLC. The question will be, “How much farther?”.

Here is some additional information to fill in the blanks of the case study. Since this is a training course, the trainer had to step in and see to it that all requirements were defined. And I found some screen flow design. Oops. The trainer found a screen flow design document that should help with test assets.

Business Requirements:

- The online car dealership application should allow customers to search for vehicles based on make, model, year, mileage, and price.

- The application should provide customers with a user-friendly interface that allows them to view detailed information about each vehicle, including photos, specifications, and pricing.

- The application should provide customers with the ability to schedule test drives and reserve vehicles online.

- The application should allow customers to submit financing applications online and receive pre-approval for financing.

- The application should allow customers to submit trade-in requests and receive estimates for their current vehicles.

- The application should allow customers to purchase vehicles online and make payments securely.

- The application should provide customers with the ability to track their vehicle deliveries and receive notifications about the delivery status.

Functional Requirements:

- The application should have a robust search engine that can search through a large inventory of vehicles and return accurate results.

- The application should allow customers to filter search results based on various criteria, such as make, model, year, mileage, and price.

- The application should have a user-friendly interface that provides customers with detailed information about each vehicle, including photos, specifications, and pricing.

- The application should provide customers with the ability to schedule test drives and reserve vehicles online.

- The application should have a secure and user-friendly financing application process that allows customers to apply for financing and receive pre-approval.

- The application should provide customers with a trade-in request process that allows them to submit their current vehicle’s details and receive an estimate.

- The application should have a secure and user-friendly online purchasing process that allows customers to purchase vehicles and make payments.

- The application should provide customers with the ability to track their vehicle deliveries and receive notifications about the delivery status.

- The application should be scalable and able to handle many users and transactions.

- The application should integrate with third-party solutions, such as MS Office and Adobe, and with multiple databases.

The QA Team would do what is called coverage analysis to identify test requirements based on the other requirements partially identified. Knowing that a strategy depends on the functional requirements and the test requirements depend on the business requirements, the QA team can adapt and review all requirements to proceed with a test plan.

Here are just a few examples of test requirements that were developed based on the current business and functional requirements. The QA team would need to develop a comprehensive set of test requirements to ensure that the application is thoroughly tested and meets the client’s expectations.

Of course, the actual screen flow for testing would depend on the specific features and functionality of the application being developed. The QA team would need to work closely with the development team to identify all the key screens and user flows that need to be tested and create test cases to ensure that each feature and function works as expected. This is coverage analysis that leads to a test Requirement Traceability Matrix (RTM) to ensure that the test cases meet or exceed the test requirements.
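The coverage check behind a Requirement Traceability Matrix can be sketched as a small Python script. This is an illustration only, not the format used by QA Mentor or by any test management tool. The requirement IDs (`TR-01` and so on), their wording, and the test case IDs are hypothetical; only the subject areas (search, financing, trade-in) come from the requirements listed earlier.

```python
from typing import Dict, List


def build_rtm(requirements: List[str], case_links: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Map each requirement ID to the test case IDs that cover it."""
    rtm: Dict[str, List[str]] = {req: [] for req in requirements}
    for case_id, linked_reqs in case_links.items():
        for req in linked_reqs:
            if req in rtm:  # links to unknown requirements are ignored in this sketch
                rtm[req].append(case_id)
    return rtm


def uncovered(rtm: Dict[str, List[str]]) -> List[str]:
    """Return the requirements that no test case covers yet."""
    return [req for req, cases in rtm.items() if not cases]


# Hypothetical test requirements and test cases, invented for illustration.
requirements = ["TR-01 search by make and model", "TR-02 financing application", "TR-03 trade-in estimate"]
case_links = {
    "TC-001": ["TR-01 search by make and model"],
    "TC-002": ["TR-01 search by make and model", "TR-02 financing application"],
}

rtm = build_rtm(requirements, case_links)
for requirement, cases in rtm.items():
    print(requirement, "->", ", ".join(cases) if cases else "NOT COVERED")
print("Gaps:", uncovered(rtm))
```

An empty gap list is the signal that every test requirement has at least one test case. It says nothing about whether those test cases are good ones, which is still a review task for the team.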

Assumptions

One more thing. Some screen functionality information was located. A high-level screen flow for testing the online car dealership application:

- Home Page: The home page of the application would provide users with the option to search for vehicles, view featured vehicles, and access other key features of the application, such as Login, Forgot password, Register, financing and trade-in options.

- Search Results Page: When a user initiates a search, they would be taken to a search results page that displays all the vehicles that match their search criteria. This page would include options for filtering and sorting the search results.

- Vehicle Details Page: When a user clicks on a specific vehicle from the search results page, they would be taken to a vehicle details page that displays all the information about that vehicle, including photos, specifications, pricing, and financing options.

- Financing Application Page: If the user chooses to apply for financing, they would be taken to a financing application page where they can enter their personal and financial information and submit the application.

- Trade-In Request Page: If the user chooses to request a trade-in estimate, they would be taken to a trade-in request page where they can enter information about their current vehicle and receive an estimate.

- Checkout Page: When the user is ready to purchase a vehicle, they would be taken to a checkout page where they can review their order, enter payment and shipping information, and complete the transaction.

- User Profile Page: After creating an account, the user would have access to a user profile page where they can view their order history, saved searches, and other relevant information.

- User Registration Page: When a user without an account specifies the Register option on the home page, they are taken to this page. They can enter user profile data and click ADD. The ADD function will display a message indicating success or failure.

- Admin Panel: The admin panel would provide the dealership with access to important data and features, such as inventory management, customer data, and sales reports.

- All pages: Treat all pages with the following common characteristics.

- All pages have Add, Update, Delete, Detail, Return, and Logout buttons. The buttons are active based on the user permissions.

- All pages contain a shopping cart display and link to the Checkout page.

- All pages contain 15 data fields. Five fields (DF1-5) are required entry fields for the add function. Five fields (DF6-10) are inquiry only fields, and five fields (DF11-15) allow update function. The fields are all inquiry for users not logged in.

- The update fields will not display on the page if the user is not logged in or is a customer user logged in.

- The ADD and UPDATE buttons are only active for staff users.

- The DELETE button is only active for Administrator users.

- All buttons are active for staff manager users.
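The button rules in the list above translate naturally into an expected-results table that test cases can be checked against. The sketch below is one possible reading of those rules, and it rests on several assumptions that the screen flow does not state: the role names are made up, Detail and Return are assumed active for everyone, Logout is assumed active for any logged-in user, and the staff manager rule is treated as the exception to the "only" wording of the other two rules. A real project would confirm each of these with the business analysts before writing test cases.

```python
ALL_BUTTONS = {"Add", "Update", "Delete", "Detail", "Return", "Logout"}
ROLES = ["guest", "customer", "staff", "staff_manager", "administrator"]  # hypothetical names


def active_buttons(role: str) -> set:
    """Return the buttons expected to be active for a role."""
    if role == "staff_manager":
        return set(ALL_BUTTONS)       # all buttons are active for staff managers
    buttons = {"Detail", "Return"}    # assumption: always active
    if role != "guest":
        buttons.add("Logout")         # assumption: active once logged in
    if role == "staff":
        buttons |= {"Add", "Update"}  # ADD and UPDATE are for staff users
    if role == "administrator":
        buttons.add("Delete")         # DELETE is for Administrator users
    return buttons


def update_fields_visible(role: str) -> bool:
    """DF11-15 are hidden for users not logged in and for customer users."""
    return role not in {"guest", "customer"}


# Print one expected-results row per role for use in test cases.
for role in ROLES:
    row = ", ".join(sorted(active_buttons(role)))
    print(f"{role}: buttons = {row}; update fields visible = {update_fields_visible(role)}")
```

Writing the rules down this way tends to expose gaps quickly. For example, the list does not say whether an Administrator can also add and update, which is exactly the kind of question to raise during test planning.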

After closing remarks will be the assessments session. This is where we can continue with a discussion as a team and use the case study for taking further STLC steps.

Closing Remarks (10 minutes):

- Key points of the lessons today

- SDLC and STLC complement each other

- You don’t have one without the other

- STLC is a subset of SDLC

- SDLC is the methodology for development activities

- STLC is the methodology for testing activities

- Some of the deliverables are input assets for STLC

- SDLC depends on the business requirements to build functional requirements and executables that function based on user requirements

- STLC depends on both business and functional requirements to build test requirements, and test assets that confirm the application meets business needs

- You are encouraged to continue on the learning journey by exploring additional resources and seeking out opportunities to apply the concepts you have learned. As I mentioned, there are sites like Fiverr and Upwork that you might find useful for gaining some level of test experience. There are websites that are always looking for usability testers as well. Of course, getting through this course will take you a long way toward the goal of being a test analyst with confidence.

Assessment: (1 hour)

Instead of once again providing a quiz or survey to gauge your understanding of SDLC and STLC, I am experimenting with the idea of emulating the real test world. I want you to use your new skills and ability to apply the concepts to a practical example. In the real world you might be on an assignment as the only QA Analyst or you could be on a team. So, you can choose either scenario. Because of the Zoom environment we are in, we either have to all elect the team approach or all elect the individual approach. I need your feedback to decide the next steps.

Here is my plan for you for the next hour:

- Let’s have an initial discussion as an opportunity to set expectations and start the look and feel of the team setting.

- I hope to increase your understanding and ability to collaborate and problem-solve in a group setting. (Kind of the Star Trek approach.)

- Draft a test plan.

- Draft a test scenario list.

- Draft some test cases for the Login and Security feature.