Introduction to QA Manual Software Test Training Module (Section 2)

Table of Contents

Module 1. Introduction to Software Testing

SDLC and the impact of testing in each stage

Test planning and design process

Ensure Test Objectives are Clear

Test data preparation: Preparation of the test data required for testing

Test case execution and defect management

Module 1. Introduction to Software Testing

Section 2

Overview of the SDLC models

Let’s look again at the different SDLC models and how each one affects the testing approach.

Waterfall Model:

The Waterfall model is a sequential SDLC model, where each stage is completed before moving on to the next. Testing in the Waterfall model is a distinct stage that begins only after implementation is complete. Because testing comes so late in the sequence, this model carries a higher risk of delays and of defects being found late in the development process.

Agile Model:

The Agile model is an iterative SDLC model, where development and testing are done in short cycles. Testing in the Agile model is done continuously throughout the development process, with a focus on early and frequent testing to identify defects and fix the problems. This approach allows for more flexibility and a faster response to changing requirements.

V-Model:

The V-Model is a variation of the Waterfall model in which each stage of development is paired with a corresponding stage of testing. Test planning and design proceed in parallel with development (for example, acceptance tests are planned while the requirements are being written), and each set of tests is executed once the matching deliverable is available. This approach ensures that testing activities start early, which can result in better quality software.

Examples

In terms of examples, let’s consider a scenario where a team is developing a mobile app. In the Waterfall model, testing would be done after the design and implementation stages are completed, which could result in defects being found late in the development process. In the Agile model, testing would be done continuously throughout the development process, with a focus on early and frequent testing to identify defects and then make necessary adjustments. In the V-Model, testing would be integrated into each stage of development, with each stage of testing corresponding to a stage of development, which can result in better quality software.

It is important to perform testing at each stage of development to ensure the quality of the software. Different SDLC models have different testing approaches, with the Agile model and V-Model placing a greater emphasis on early and frequent testing, while the Waterfall model takes a more sequential approach. The choice of SDLC model and testing approach should always depend on the specific project requirements and constraints. In the real world, however, the choice is often a management decision driven by budget constraints. A common view these days is that development project costs can be controlled better with an Agile methodology, which is typically rolled out as Scrum or Kanban.

SDLC and the impact of testing in each stage

I mentioned development stages before. Let me mention them again. They are requirements gathering, design, implementation, testing, deployment, and maintenance. We are going to look at each of these in sequence. When the discussion is done, you should understand how testing activities fit into the development stages to help increase software quality.

Requirement Gathering Stage:

In this stage, the software requirements are gathered from the stakeholders, such as customers, business analysts, and end-users. Testing in this stage involves ensuring that the requirements are clear, complete, and testable. It also involves identifying any conflicting or ambiguous requirements that may cause issues during development or testing. For example, if the requirement is to build an e-commerce website, the testing team should verify that all the necessary features, such as search functionality, shopping cart, and payment gateway, are included in the requirement.

This works well when the test team is hired or already in place at this stage. That is not always the case, for budget and sourcing reasons. A project may get started on a shoestring budget that covers requirements gathering but not the testers who could begin early test activities.

Design Stage:

In the design stage, the system architecture and design are created based on the requirements gathered in the previous stage. Testing in this stage involves verifying that the design meets the requirements, is scalable, and can be implemented within the given timeframe and budget. For example, the testing team should ensure that the design is responsive and works seamlessly across different devices, such as desktops, laptops, and mobile devices. This places a special demand on the testers’ skill set. It typically requires test engineers at this stage, because they need the right technology skills and the experience of having worked on other projects of a similar nature. Once you have been involved in more than one project, it’s likely you can start on a project at this stage.

Implementation Stage:

In this stage, the software is developed based on the design and requirements. Testing in this stage involves unit testing, integration testing, and system testing. Unit testing involves testing individual software components to ensure they are working as intended. This usually means testing a class or function from within an IDE using white-box testing techniques. We will revisit this later in the course. Then there is integration testing, which involves testing the integration between different components to ensure they work together seamlessly. You may still be in an IDE as your test environment. Finally, there is system testing, which involves testing the software system in its entirety to ensure that it meets the requirements and is free from defects. For example, if the software is a financial management system, the testing team should verify that the calculations are accurate, the reports generated are correct, and the system is secure.
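To make the unit testing step a little more concrete, here is a minimal sketch using Python's built-in unittest module. The function cart_total and its rules are hypothetical, invented only so there is something small to test; they are not taken from any real project.

```python
import unittest


def cart_total(prices, tax_rate):
    """Hypothetical unit under test: sum the prices and add tax."""
    if tax_rate < 0:
        raise ValueError("tax_rate must not be negative")
    subtotal = sum(prices)
    return round(subtotal * (1 + tax_rate), 2)


class CartTotalTest(unittest.TestCase):
    """Each method checks one behavior of the unit in isolation."""

    def test_adds_tax_to_subtotal(self):
        self.assertEqual(cart_total([10.00, 5.00], 0.10), 16.50)

    def test_empty_cart_costs_nothing(self):
        self.assertEqual(cart_total([], 0.10), 0.0)

    def test_negative_tax_rate_is_rejected(self):
        with self.assertRaises(ValueError):
            cart_total([10.00], -0.05)


def run_unit_tests():
    """Run the test class above and return the unittest result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(CartTotalTest)
    return unittest.TextTestRunner(verbosity=2).run(suite)


if __name__ == "__main__":
    run_unit_tests()
```

Notice that the tests know about the inside of the function (the rounding, the rejected negative rate). That inside knowledge is what makes this white-box testing.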

Now, you are in a shared test environment set up to emulate the real-world environment. This may be one of several test environments designated to accommodate the software and data for whatever software versions are deployed. And speaking of data, somebody is responsible for loading the test data. Sometimes the developers are responsible. Other times the testers are responsible for keeping the data evergreen. It is normal for databases to change to accommodate requirement changes or corrections.

Anyway, the test data load or update process may require ETL skills, programming skills, application knowledge, or all of the above to complete. I mentioned ETL, which is an acronym for extraction, transformation, and loading. It is possible that other systems are being retired as part of the new development project. If so, data from those production applications may be captured and loaded into the new system. This entire process becomes something for testers to test. In addition, there might be a need to write programs that act as utilities to massage data and load it into the test environment. It is also possible that some data can be loaded into the test environment using SQL. SQL is short for Structured Query Language, which is a language for querying and loading databases. As if I had not mentioned enough, as the application development matures it is possible to use the functionality of the new application itself to load new test data.
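As a small illustration of loading test data with SQL, the sketch below uses Python's built-in sqlite3 module and an in-memory database. The accounts table, its columns, and the sample rows are all assumptions made up for this example; a real project would have its own schema and database.

```python
import sqlite3

# Hypothetical test records; the table and column names are assumptions.
TEST_ACCOUNTS = [
    (1001, "Test User A", 250.00),
    (1002, "Test User B", 0.00),
    (1003, "Test User C", -15.75),  # overdrawn account, an edge case
]


def load_test_accounts(conn, rows):
    """Create the accounts table if needed and reload it with test rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS accounts ("
        "account_id INTEGER PRIMARY KEY, "
        "owner TEXT NOT NULL, "
        "balance REAL NOT NULL)"
    )
    conn.execute("DELETE FROM accounts")  # start from a known state
    conn.executemany(
        "INSERT INTO accounts (account_id, owner, balance) VALUES (?, ?, ?)",
        rows,
    )
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM accounts").fetchone()[0]


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")  # throwaway in-memory database
    print(load_test_accounts(connection, TEST_ACCOUNTS), "rows loaded")
```

Clearing the table before each load is one simple way to keep the data in a known state, so the same load can be repeated whenever the environment is refreshed.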

If you are new to testing, you may be thinking that testing never requires any programming. I spent the first 20 years of my career programming many hours. I spent the next 20 years testing and found myself writing programs just as much. I don’t think that I am the exception. But it will depend on the stage at which you join a development project. That will determine the scope of your testing activities.

Testing Stage:

In the testing stage, there should be no surprise. The software is thoroughly tested to identify and fix any defects or issues. Testing in this stage involves functional testing, performance testing, security testing, and user acceptance testing (UAT). Functional testing involves testing the software to ensure it meets the specified requirements. Performance testing involves testing the software to ensure it performs as expected under different loads and stress. Security testing involves testing the software to identify and fix any security vulnerabilities. UAT involves testing the software with end-users to ensure it meets their needs and expectations. Sometimes this is a turnover of the test environment for the users or business analysts to conduct their own test activities. But other times it is a team effort where testers and users work together to learn from each other.

For example, if the software is a mobile application, the testing team should verify that the application works on different mobile devices, the response time is acceptable, and the application is easy to use. Then they will engage the users for acceptance review after the test team demonstrates the application. This allows the users to act as consultants during the process and promotes teamwork.

Deployment Stage:

In the deployment stage, the software is ready for release and is deployed to the production environment. Testing in this stage involves ensuring that the software works as expected in the production environment and is compatible with other systems and applications. For example, the testing team should verify that the software is compatible with different browsers, operating systems, and database systems if any of those change.

Deployment may not be a simple process. During the project there may have been many configuration changes and requirements. The production ETL process may have intricate needs for setting up the databases. Security requirements may be different from what was in the test environment. And in the process of porting the executables to production, things can go wrong. With all this, a deployment test plan is necessary, and it must actually be executed.

Maintenance Stage:

In this stage, the software is maintained and updated as needed to ensure it continues to meet the ongoing requirements and performs as expected. Testing in this stage involves regression testing to ensure that the changes made do not affect the existing functionality and performance. For example, if the software is an e-commerce website, the testing team should verify that any updates made, such as changing the payment gateway, do not affect the existing functionality, such as adding items to the cart.

Testing should be performed in each stage of the SDLC to identify and fix any defects or issues. The examples mentioned illustrate the types of testing that should be performed in each stage to ensure that the software meets the requirements and performs as expected.

Test planning and design process

Test planning involves defining the overall testing approach, determining the scope of testing, identifying the testing objectives, defining the test environment, and identifying the test resources. Test design involves developing a comprehensive set of test cases that will effectively and efficiently validate the software requirements.

The test planning process begins with identifying the testing objectives and the scope of testing. The testing objectives should be aligned with the software development project goals, and the scope of testing should be clearly defined based on the requirements of the project. Once the objectives and scope have been defined, the test environment must be established, including hardware, software, and other necessary resources.

As I mentioned earlier, the hardware might first be your own machine as a personal test environment until the development process matures enough. When that happens one or more test environments can be set up. At that point it is necessary to establish protocol for data and software migrations into the test environments.

Next, the testing approach must be developed, which includes identifying the types of testing that will be performed, such as functional testing, performance testing, security testing, and others. The testing approach should be tailored to the specific needs of the project, and the risks associated with each type of testing should be evaluated. Risk assessment is another skill that can be advantageous to testers.

Finally, the test resources must be identified, including the testing team, tools, and processes that will be used to perform the testing. The team should have the appropriate skills and experience, and the tools and processes should be selected based on the specific requirements of the project.

Test Design

Test design involves creating a comprehensive set of test cases that will effectively and efficiently validate the software requirements. The test cases should be designed to test each requirement, including the functional, performance, and security requirements. Even though we mention them together here, in the real world they are usually managed by three separate teams.

The test case design process begins with identifying the test objectives for each requirement. The test objectives should be clearly defined and measurable, and the testing approach should be tailored to the specific requirement being tested. Once the objectives have been identified, the test cases can be designed.

Test Objectives

Let’s detail the process or skill regarding defining test objectives for each development requirement. Here’s a general process for defining test objectives:

- Understand the requirement: Before defining the test objectives, it’s important to understand the requirement in detail. This involves analyzing the requirement document or user stories, and discussing with stakeholders to ensure that the requirement is well-understood.

- Identify the acceptance criteria: Acceptance criteria are the conditions that must be met to consider the requirement completed. These criteria may include functional, performance, security, usability, and other aspects. Identifying acceptance criteria helps in defining the test objectives that will ensure that the requirement is met.

- Define the test scenarios: Test scenarios are specific examples or situations that demonstrate the requirement’s behavior. They help in identifying the areas that need to be tested to achieve the acceptance criteria. The test scenarios should cover both positive and negative cases.

- Determine the test objectives: Test objectives are specific goals that the testing team aims to achieve while testing the requirement. They should be specific, measurable, achievable, relevant, and time-bound (SMART). For example, the test objective for a requirement may be to ensure that the user can create a new account with valid credentials and that the account is stored in the database.

- Prioritize the test objectives: Not all test objectives are equally important. Prioritizing the test objectives based on their importance and the risk associated with them helps in ensuring that the most critical aspects of the requirement are tested first.

- Document the test objectives: Finally, document the test objectives in a test plan or a similar document to ensure that they are clearly defined, understood, and tracked throughout the testing process.

By following these steps, you can define clear and specific test objectives for each development requirement, which can help in ensuring that the software meets the acceptance criteria and is of high quality.
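The steps above can be sketched as a small data structure. Everything here is an assumption for illustration: the Objective class, its field names, the 1 to 5 scales, and the choice to score priority as risk times importance. Your project may record and rank objectives quite differently.

```python
from dataclasses import dataclass, field


@dataclass
class Objective:
    requirement_id: str   # hypothetical requirement identifier
    description: str      # what the testing must demonstrate
    acceptance_criteria: list = field(default_factory=list)
    risk: int = 1         # 1 (low) to 5 (high), an assumed scale
    importance: int = 1   # 1 (low) to 5 (high), an assumed scale

    def priority(self):
        """Assumed scoring rule: higher risk and importance rank first."""
        return self.risk * self.importance


def prioritize(objectives):
    """Return the objectives ordered from most to least critical."""
    return sorted(objectives, key=lambda o: o.priority(), reverse=True)


OBJECTIVES = [
    Objective("REQ-2", "User can reset a forgotten password",
              ["Reset email is sent"], risk=2, importance=3),
    Objective("REQ-1", "User can create a new account with valid credentials",
              ["Account is stored in the database"], risk=4, importance=5),
]

if __name__ == "__main__":
    for objective in prioritize(OBJECTIVES):
        print(objective.priority(), objective.requirement_id, objective.description)
```

Writing objectives down in a structured form like this, even in a spreadsheet, makes it easier to document and track them through the testing process.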

Ensure Test Objectives are Clear

To some of us this may seem like overkill. I understand. But for others, defining objectives does not come naturally, and a little help ensuring that the test objectives are clearly defined and measurable goes a long way. Clear objectives are also what keep the testing process effective and efficient. Here are some steps to follow to ensure that the test objectives are clearly defined and measurable:

- Use clear and concise language: When defining the test objectives, use clear and concise language that is easy to understand. Avoid using technical jargon or complex terms that may not be familiar to everyone involved in the testing process.

- Use SMART criteria: Use the SMART criteria to define the test objectives. SMART stands for Specific, Measurable, Achievable, Relevant, and Time-bound. This means that each test objective should be clear, measurable, attainable, relevant to the testing goal, and have a specific deadline.

- Define success criteria: Define success criteria that will indicate whether the test objective has been met or not. For example, if the test objective is to ensure that a login feature is working, the success criteria might be that users can log in successfully without any errors or delays.

- Use metrics to measure progress: Use metrics to track progress towards achieving the test objectives. This could include the number of test cases executed, the percentage of defects found and fixed, and the overall pass rate for each test objective.

- Validate the test objectives: Validate the test objectives with the project stakeholders, including the development team, business analysts, and product owners, to ensure that everyone agrees on the objectives and their expected outcomes.

- Review and refine: Review the test objectives regularly and refine them as needed. If the objectives are not clearly defined or measurable, they may need to be re-worded or split into smaller, more specific objectives.

By following these steps, you can ensure that the test objectives are clearly defined and measurable, and the testing process is effective and efficient.
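The metrics mentioned above are simple arithmetic, and a short sketch may help. The function names, the status values ("pass", "fail", "not run"), and the sample results below are assumptions for illustration, not the output of any particular test management tool.

```python
from collections import defaultdict


def percent(part, whole):
    """Return part/whole as a percentage, or 0.0 when there is nothing to count."""
    return round(100.0 * part / whole, 1) if whole else 0.0


def summarize(results):
    """Summarize (objective, status) pairs into executed counts and pass rates."""
    counts = defaultdict(lambda: {"executed": 0, "passed": 0})
    for objective, status in results:
        if status in ("pass", "fail"):  # "not run" cases are not executed
            counts[objective]["executed"] += 1
        if status == "pass":
            counts[objective]["passed"] += 1
    return {
        objective: {
            "executed": c["executed"],
            "pass_rate": percent(c["passed"], c["executed"]),
        }
        for objective, c in counts.items()
    }


# Hypothetical results for two test objectives.
SAMPLE_RESULTS = [
    ("login", "pass"), ("login", "pass"), ("login", "fail"), ("login", "not run"),
    ("checkout", "pass"), ("checkout", "pass"),
]

if __name__ == "__main__":
    print(summarize(SAMPLE_RESULTS))
    print("defects fixed:", percent(8, 10), "%")  # 8 of 10 found defects fixed
```

In this sample, the login objective has three executed cases with two passes, so its pass rate is 66.7 percent, while checkout is at 100 percent.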

Test Plan and Planning

As described at the start of this topic, test planning defines the overall approach, scope, objectives, environment, and resources for testing, while test design produces the test cases that validate the software requirements. Effective test planning and design can help ensure that software products are delivered with the highest quality and meet the requirements of the stakeholders.

What is a Test Plan?

A test plan is a document that outlines the testing approach, objectives, and deliverables for a particular project or software release. It serves as a roadmap for the testing process, providing a clear understanding of what is to be tested, how it will be tested, and what the expected outcomes are.

Why is a Test Plan Important?

A test plan is important for several reasons. Firstly, it ensures that all requirements and features of the software are tested thoroughly, helping to prevent defects and ensure that the software meets the needs of its users. Additionally, a test plan provides a framework for managing the testing process, enabling teams to work more efficiently and effectively. The size of the test team and the development team can dictate how much this document is needed. Small teams of fewer than three people may be able to work without a formal plan, but if time permits the document is still useful. Finally, a test plan serves as a critical reference document, providing a record of what was tested and how it was tested for future reference.

What Should Be Included in a Test Plan?

While the specific contents of a test plan may vary depending on the project, there are several key components that should be included:

Introduction

The introduction should provide an overview of the project or software release that is being tested. It should describe the scope of the testing effort and any relevant background information, such as the intended audience for the software or any known issues that need to be addressed.

Testing Approach

The testing approach section should describe the overall testing strategy that will be used for the project. This may include information on the types of testing that will be performed, such as functional, performance, or security testing. It may also include information on any testing tools or methodologies that will be used.

Testing Strategy

A testing strategy is a high-level plan that outlines the approach and methodology for testing a software product or system. It describes the overall testing approach, including the scope of testing, test types, and levels of testing, test automation, and the resources required to perform testing.

The testing strategy should be based on the project requirements, the software development life cycle, and the risks associated with the project. It is typically developed by the testing team in collaboration with the project stakeholders, including the development team, business analysts, and product owners.

The testing strategy should include the following elements:

- Test Objectives: The overall goals of testing, including what the testing is expected to achieve and the outcomes that the testing should produce. This may include objectives such as verifying that all requirements have been met, ensuring that the software is user-friendly, or identifying and fixing defects.

- Test Scope: The features or components of the software product that will be tested, and those that will not be tested. It should include a clear definition of what constitutes the software product, and what its components are.

- Test Types and Levels: The types of tests that will be performed, such as unit testing, integration testing, system testing, acceptance testing, etc. and the level at which they will be performed.

- Test Environment: The hardware, software, and other resources that are required to create the testing environment, including test data and test tools. This may include information on the operating systems, databases, browsers, and other tools that will be used.

- Test Automation: The level of automation that will be used for testing, including tools and frameworks for automating tests.

- Test Metrics and Reporting: The metrics that will be used to measure the effectiveness of the testing, such as defect density, test coverage, and test pass rate, and how the results of the testing will be reported to the project stakeholders. The test reporting should describe how test results will be documented and communicated. This may include information on how defects will be reported, how test summary reports will be produced, and how test results will be shared with stakeholders.

- Test Execution Schedule: The timeline for testing activities, including when each type of test will be performed, how long it will take, and who will be responsible for executing the tests. The test schedule should include key milestones and deadlines. This may include dates for test planning, test case development, test execution, and reporting.

- Test Execution: The test execution should describe how the testing will be performed. This may include information on the specific test cases that will be executed, the order in which they will be executed, and any dependencies or prerequisites that must be in place.

So, the test plan can be considered synonymous with a test strategy. Or you can say that the strategy is a subset of the plan.

Test Deliverables

The test deliverables section should list the specific documents, reports, or other materials that will be produced as part of the testing effort. This may include test cases, test scripts, bug reports, and test summary reports.

Test Plan Template

Here is a sample test plan template that you can use as a starting point for your own test plan:

Introduction

- Overview of the project or software release being tested

- Scope of the testing effort

- Relevant background information

Testing Approach

- Description of the overall testing strategy

- Types of testing to be performed

- Testing tools and methodologies to be used

Test Strategy

- Specific goals and objectives of the testing effort

- Verification of requirements

- Usability testing

- Defect identification and resolution

Test Cases and Suites

Test cases should be designed to be repeatable, consistent, and efficient. They should cover all possible scenarios and be designed to uncover defects and errors. That is the key. A test case should have the potential of discovering a problem with the application. Either the test proves the application is working or it proves something needs attention. Each test case should have a clear set of steps that can be followed to execute the test, and the expected results should be clearly defined.

The test cases should also be organized into test suites, with each suite focused on testing a specific requirement or feature. The test suites should be designed to be executed in a logical order, and the test results should be recorded and analyzed to identify defects and errors.

Test case documents usually identify expected results and actual results for each test case. Sometimes it might be better to create two templates for test cases where one references test expectation and the other references actual results. That is not a requirement but may help make the process easier.

An example of test case design might involve testing a login screen for a web application. The test objective might be to verify that the user can successfully log in using a valid username and password. The test case would be designed to cover all possible scenarios, including testing for invalid usernames and passwords, testing for expired passwords, and testing for locked accounts.

The test case would be designed to include clear steps for executing the test, such as entering the username and password, clicking the login button, and verifying that the user is successfully logged in. The expected results would be clearly defined, such as verifying that the user is redirected to the home page of the application.
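Here is a minimal sketch of how the login scenarios described above could be automated. The login function, the in-memory USERS store, and the messages it returns are all stand-ins I made up for this example; a real test would drive the actual application instead.

```python
# Hypothetical user store standing in for the real application.
USERS = {
    "alice": {"password": "s3cret!", "expired": False, "locked": False},
    "bob": {"password": "hunter2", "expired": True, "locked": False},
    "carol": {"password": "pa55word", "expired": False, "locked": True},
}


def login(username, password):
    """Return the page or message the user would see after a login attempt."""
    user = USERS.get(username)
    if user is None or user["password"] != password:
        return "error: invalid username or password"
    if user["locked"]:
        return "error: account locked"
    if user["expired"]:
        return "error: password expired"
    return "home page"


# Each case: (scenario, username, password, expected result).
LOGIN_CASES = [
    ("valid credentials", "alice", "s3cret!", "home page"),
    ("invalid username", "mallory", "s3cret!", "error: invalid username or password"),
    ("invalid password", "alice", "wrong", "error: invalid username or password"),
    ("expired password", "bob", "hunter2", "error: password expired"),
    ("locked account", "carol", "pa55word", "error: account locked"),
]


def run_login_cases(cases):
    """Execute every case and record expected versus actual results."""
    results = []
    for scenario, username, password, expected in cases:
        actual = login(username, password)
        results.append((scenario, expected, actual, actual == expected))
    return results


if __name__ == "__main__":
    for scenario, expected, actual, passed in run_login_cases(LOGIN_CASES):
        print("PASS" if passed else "FAIL", scenario, "| expected:", expected, "| actual:", actual)
```

Each row has clear inputs and a clearly defined expected result, and the run records the actual result next to it, which is exactly what a test case document asks for.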

Test Case Design:

Designing the test cases to ensure that the software is tested thoroughly.

Here is a test case document design that looks like a spreadsheet with the following column headings: Test Case Number, Test Case Name, Test Description, Test Entry Data, and Test Expectation. This example includes test cases for Login, Inquire Account, and Add Account.

| Test Case Number | Test Case Name | Test Description | Test Entry Data | Test Expectation |
| --- | --- | --- | --- | --- |
| 1 | Login Test | Verify user can log in with valid credentials | Username and password | User is successfully logged in |
| 2 | Login Test | Verify user cannot log in with invalid credentials | Invalid username and/or password | User receives an error message |
| 3 | Inquire Account Test | Verify user can successfully inquire about account | Account ID | User is able to view account information |
| 4 | Inquire Account Test | Verify user receives an error message when attempting to inquire about non-existent account | Non-existent account ID | User receives an error message |
| 5 | Add Account Test | Verify user can successfully add a new account | Account details | Account is successfully added |
| 6 | Add Account Test | Verify user receives an error message when attempting to add a duplicate account | Duplicate account details | User receives an error message |

In the above example, the test case document design includes six test cases for three different features: Login, Inquire Account, and Add Account. Each test case is given a unique number, a name that identifies the feature under test, and a description of what the test is intended to verify. The test entry data column contains the data that will be used to perform the test, while the test expectation column describes the expected result of the test.

For example, test case 1 (“Login Test”) is designed to verify that a user can log in with valid credentials. The test entry data might include a valid username and password, while the expected result is that the user is successfully logged in. Conversely, test case 2 is designed to verify that the user cannot log in with invalid credentials, such as an incorrect password, and that the user receives an error message.

The other test cases follow a similar format, with each test case designed to validate a particular feature or functionality of the software. By designing test cases in this manner, it becomes easier to perform comprehensive testing and ensure that all the software requirements are met.
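If you would rather keep the test case document as data, the sketch below holds the same six rows in Python, groups them into suites by test case name, and writes them out as CSV text that a spreadsheet can open. The function names and the grouping-by-name rule are my own assumptions for illustration.

```python
import csv
import io

HEADINGS = ["Test Case Number", "Test Case Name", "Test Description",
            "Test Entry Data", "Test Expectation"]

TEST_CASES = [
    (1, "Login Test", "Verify user can log in with valid credentials",
     "Username and password", "User is successfully logged in"),
    (2, "Login Test", "Verify user cannot log in with invalid credentials",
     "Invalid username and/or password", "User receives an error message"),
    (3, "Inquire Account Test", "Verify user can successfully inquire about account",
     "Account ID", "User is able to view account information"),
    (4, "Inquire Account Test",
     "Verify user receives an error message when attempting to inquire about non-existent account",
     "Non-existent account ID", "User receives an error message"),
    (5, "Add Account Test", "Verify user can successfully add a new account",
     "Account details", "Account is successfully added"),
    (6, "Add Account Test",
     "Verify user receives an error message when attempting to add a duplicate account",
     "Duplicate account details", "User receives an error message"),
]


def group_into_suites(cases):
    """Map each test case name (the feature) to its test case numbers."""
    suites = {}
    for number, name, *_ in cases:
        suites.setdefault(name, []).append(number)
    return suites


def to_csv(cases):
    """Render the test cases as CSV text with the column headings first."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(HEADINGS)
    writer.writerows(cases)
    return buffer.getvalue()


if __name__ == "__main__":
    print(group_into_suites(TEST_CASES))
    print(to_csv(TEST_CASES))
```

Grouping by name gives three suites of two cases each, one suite per feature.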

Test data preparation: Preparation of the test data required for testing.

Test data preparation is an important aspect of software testing that involves creating or selecting data to be used in testing the software. The test data should be representative of the actual data that the software will process in a production environment. Here are some thoughts that should go into test data preparation requirements:

- Data Relevance: The test data should be relevant to the software functionality that is being tested. It should include both valid and invalid data scenarios, to ensure that the software can handle all possible inputs.

- Data Quantity: The test data should be of sufficient quantity to test the software thoroughly. This includes testing the software under a range of data volumes, including high volume and low volume scenarios.

- Data Variability: The test data should include variations in data, such as different formats, data structures, and data sources. This can help to identify potential issues with data integration and transformation.

- Data Security: The test data should be created or selected in such a way that it does not compromise any sensitive or confidential information. This includes ensuring that the test data does not contain any personally identifiable information (PII) or sensitive financial data.

- Data Complexity: The test data should be complex enough to test the software’s ability to handle complex data scenarios. This includes testing the software under conditions such as concurrent data access, access by multiple users, and heavy load.

- Data Management: The test data should be managed in a way that ensures its integrity and accuracy. This includes version control, data backup and restore, and maintaining the data in a secure and accessible location.

- Data Maintenance: The test data should be maintained throughout the testing process. This includes updating the data as the software is modified, ensuring that the test data is kept up to date with any changes to the software or data sources.

By considering these thoughts when preparing test data, the testing team can ensure that the test data is comprehensive, relevant, secure, and properly managed, resulting in more effective testing of the software.
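Two of the points above, data security and including invalid data, lend themselves to a short sketch. The record layout (name, email, card_number, balance), the masking rules, and the particular invalid variants are assumptions for illustration. Real masking requirements come from your organization's security policy.

```python
def mask_records(records):
    """Return copies of production-like records with PII replaced."""
    masked = []
    for index, record in enumerate(records, start=1):
        safe = dict(record)  # copy so the source record is left untouched
        safe["name"] = f"Customer {index:04d}"
        safe["email"] = f"customer{index:04d}@example.com"
        # Assumes a 16-digit card number; keep only the last four digits.
        safe["card_number"] = "*" * 12 + record["card_number"][-4:]
        masked.append(safe)
    return masked


def invalid_variants(record):
    """Build negative-test records from one valid record."""
    return [
        {**record, "email": "not-an-email"},  # malformed email
        {**record, "card_number": ""},        # missing card number
        {**record, "balance": -1.0},          # out-of-range balance
    ]


# Hypothetical source records, invented for this example.
SOURCE = [
    {"name": "Pat Example", "email": "pat@corp.example",
     "card_number": "4111111111111111", "balance": 120.0},
    {"name": "Lee Sample", "email": "lee@corp.example",
     "card_number": "5500000000000004", "balance": 0.0},
]

if __name__ == "__main__":
    valid = mask_records(SOURCE)
    test_data = valid + invalid_variants(valid[0])
    for row in test_data:
        print(row)
```

Masking first and then deriving the invalid variants from the masked rows means no real names or card numbers end up anywhere in the test data set.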

If ETL is involved, how does that impact the thinking concerned with test data preparation?

ETL (Extract, Transform, and Load) is a process used to extract data from various sources, transform the data into a format that can be used by the target system, and load the transformed data into the target system. ETL testing involves testing the entire ETL process to ensure that the data is extracted, transformed, and loaded correctly. Here are some ways that ETL impacts the thinking concerned with test data preparation:

- Data Volume: ETL processes are typically used to process large volumes of data. As a result, the test data should be representative of the actual data volumes that will be processed in the production environment.

- Data Variability: ETL processes often involve transforming data from multiple sources into a common format. The test data should include data from different sources and formats to test the ability of the ETL process to handle such variability.

- Data Quality: The quality of the data is critical to the success of the ETL process. The test data should include a range of data quality scenarios, including both valid and invalid data, to ensure that the ETL process can handle all possible scenarios.

- Data Integration: ETL processes often involve integrating data from different sources. The test data should include scenarios where data from different sources is integrated and transformed to ensure that the ETL process is capable of handling complex data integration scenarios.

- Data Lineage: ETL processes often have complex data lineage, which is the record of how data moves from its source to its destination. The test data should include scenarios with complex lineage, so the team can confirm that the ETL process tracks lineage accurately.

- Data Security: The test data should be created or selected in such a way that it does not compromise any sensitive or confidential information. This includes ensuring that the test data does not contain any personally identifiable information (PII) or sensitive financial data.

- Performance Testing: ETL processes involve processing large volumes of data, and the test data should include scenarios where the ETL process is tested for performance, including response times and data processing throughput.

By considering these aspects of ETL testing when preparing test data, the testing team can ensure that the ETL process is capable of processing large volumes of data from multiple sources, transforming the data accurately, and loading it into the target system correctly.
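The sketch below illustrates a few of these ideas on a very small scale: test rows from two sources with different date formats, a mix of valid and invalid records, and a reconciliation check after the load. The source names, field names, and the `transform` and `run_etl` functions are all hypothetical; a real ETL job would use the project's own tools and rules.

```python
from datetime import datetime

# Hypothetical test rows from two source systems with different date formats.
source_rows = [
    {"source": "crm", "id": "1", "amount": "19.99", "date": "2023-01-15"},
    {"source": "billing", "id": "2", "amount": "5", "date": "15/02/2023"},
    {"source": "crm", "id": "3", "amount": "not-a-number", "date": "2023-03-01"},  # invalid amount
    {"source": "billing", "id": "", "amount": "7.50", "date": "01/04/2023"},  # missing id
]

DATE_FORMATS = {"crm": "%Y-%m-%d", "billing": "%d/%m/%Y"}


def transform(row):
    """Convert one source row to the assumed target format, or raise ValueError."""
    if not row["id"]:
        raise ValueError("missing id")
    parsed_date = datetime.strptime(row["date"], DATE_FORMATS[row["source"]])
    return {
        "id": int(row["id"]),
        "amount_cents": round(float(row["amount"]) * 100),
        "date": parsed_date.date().isoformat(),
        "source": row["source"],  # kept so each loaded row can be traced to its origin
    }


def run_etl(rows):
    """Transform every row, separating loaded rows from rejected ones."""
    loaded, rejected = [], []
    for row in rows:
        try:
            loaded.append(transform(row))
        except ValueError as err:
            rejected.append((row, str(err)))
    return loaded, rejected


loaded, rejected = run_etl(source_rows)

# Reconciliation: every source row must be accounted for, either loaded or rejected.
assert len(loaded) + len(rejected) == len(source_rows)

print("loaded:", len(loaded), "rejected:", len(rejected))
for row, reason in rejected:
    print("rejected id=%r from %s: %s" % (row["id"], row["source"], reason))
```

The same pattern scales up: the test data deliberately contains known bad rows, and the tester checks that the counts of loaded and rejected records add up to the number of source records.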

Test case execution and defect management

Test case execution and defect management are related: managing defects is part of test execution. When a tester encounters a problem, that starts the defect (or bug) management process.

If you are new to testing, you might be wondering about the origin of “bug”. As the story goes, it comes from an old Welsh word, bwg (pronounced boog), which means evil spirit or hobgoblin and may also be the origin of boogeyman, the imaginary creature that haunts little children. The first bug was probably the bedbug, since it terrorized folks at night by feeding on them. There is also a well-known piece of computing history involving Grace Hopper. When Hopper was released from active duty in 1946, she joined the Harvard faculty at the Computation Laboratory, where she continued her work on the Mark II and Mark III computers. In 1947, operators traced an error in the Mark II to a moth trapped in a relay, an incident that helped popularize the term “bug” for a computer fault. So, there you have it.

Test case execution is the process of executing the test cases and evaluating the results to determine whether the software meets the specified requirements. This phase of testing is where the rubber meets the road, and where the software is put to the test to see if it meets the customer’s needs.

During Test Execution, the testing team will follow the steps outlined in the test cases and compare the actual results with the expected results. Any deviations from the expected results are documented as defects, which are then tracked and managed through the Defect Management process.
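Although this module is about manual testing, the compare-and-record logic is easy to see in a short sketch. The function under test (`apply_discount`), the test case IDs, and the defect fields below are made up for illustration, and the function contains a deliberate bug so that one case fails.

```python
def apply_discount(total, code):
    """Hypothetical function under test, with a deliberate bug for SAVE20."""
    if code == "SAVE10":
        return round(total * 0.90, 2)
    if code == "SAVE20":
        return round(total * 0.85, 2)  # deliberate bug: the requirement says 20% off
    return total


# Each test case lists its inputs and the expected result from the requirements.
test_cases = [
    {"id": "TC-001", "inputs": (100.0, "SAVE10"), "expected": 90.0},
    {"id": "TC-002", "inputs": (100.0, "SAVE20"), "expected": 80.0},
    {"id": "TC-003", "inputs": (100.0, "NONE"), "expected": 100.0},
]


def execute(cases):
    """Run each case, record PASS or FAIL, and log a defect for each deviation."""
    results, defects = [], []
    for case in cases:
        actual = apply_discount(*case["inputs"])
        passed = actual == case["expected"]
        results.append({"id": case["id"], "status": "PASS" if passed else "FAIL"})
        if not passed:
            defects.append({
                "test_case": case["id"],
                "steps": "apply_discount%r" % (case["inputs"],),
                "expected": case["expected"],
                "actual": actual,
            })
    return results, defects


results, defects = execute(test_cases)
for result in results:
    print(result["id"], result["status"])
for defect in defects:
    print("Defect from", defect["test_case"], "expected", defect["expected"], "got", defect["actual"])
```

Notice that the defect record carries the steps, the expected result, and the actual result. Those are the same details a manual tester writes into a defect report.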

Defect management is an essential part of software testing, and it involves identifying, reporting, tracking, and resolving defects or issues found during testing. Here are some key activities involved in defect management in a test project:

- Defect Identification: This involves identifying defects or issues during testing. Defects can be identified through manual testing, automated testing, or through reviews and inspections. This information may be recorded in a hardcopy document, a spreadsheet such as Excel, or a software tool such as ALM or JIRA.

- Defect Reporting: Once a defect is identified, it needs to be reported to the development team. The defect report should include details such as the steps to reproduce the issue, the severity and priority of the defect, and any supporting documentation. If a software tool is in use, it captures all of this information and gives multiple people access to track the defect.

- Defect Tracking: The defect should be tracked throughout the defect management process, which includes assigning a unique identifier to the defect, tracking its status, and assigning it to the appropriate developer for resolution or to a tester for re-testing to confirm the fix.

- Defect Triage: Defect triage involves reviewing open defects and deciding the order in which they will be fixed, based on their severity and priority. High severity defects should be prioritized for immediate resolution, while lower severity defects can be addressed later in the development cycle. Triage is usually done in a meeting where developers and testers review the status of outstanding defects.

- Defect Resolution: Once a defect has been assigned to a developer, they should work to resolve the issue. This involves debugging, testing, and making code changes to fix the issue. Once the issue has been resolved, the developer should update the status of the defect. Typically, the recurring triage meeting is where the fixed bug is reassigned to the originating tester.

- Defect Verification: After a defect has been resolved, it should be retested to ensure that it has been fixed. The testing team should verify that the defect is no longer present in the software.

- Defect Closure: Once the defect has been verified as resolved, it can be closed in the defect tracking system. The testing team should also update any associated documentation or test cases to reflect the resolution of the defect.

Defect management is an ongoing process throughout the software development lifecycle, and it requires close collaboration between the testing and development teams to ensure that defects are identified, tracked, and resolved effectively.
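The activities above can be pictured as a defect record that moves through a set of statuses. The sketch below is one possible way to model that, assuming a simplified set of status names and allowed transitions. Real tools such as ALM or JIRA define their own workflows, so treat `ALLOWED`, `Defect`, and `triage_order` as illustrative names only.

```python
from dataclasses import dataclass, field

# Assumed statuses and transitions; an actual tracker's workflow will differ.
ALLOWED = {
    "New": {"Assigned", "Rejected"},
    "Assigned": {"Fixed", "Deferred"},
    "Fixed": {"Verified", "Reopened"},
    "Reopened": {"Assigned"},
    "Deferred": {"Assigned"},
    "Verified": {"Closed"},
    "Rejected": set(),
    "Closed": set(),
}


@dataclass
class Defect:
    defect_id: str  # unique identifier used for tracking
    summary: str
    severity: int  # 1 = most severe
    status: str = "New"
    history: list = field(default_factory=list)

    def move_to(self, new_status):
        """Change status only if the workflow allows it, and keep an audit trail."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError("%s -> %s is not allowed" % (self.status, new_status))
        self.history.append((self.status, new_status))
        self.status = new_status


def triage_order(defects):
    """Order defects for the triage meeting, most severe first."""
    return sorted(defects, key=lambda d: d.severity)


typo_bug = Defect("BUG-101", "Typo on help page", severity=4)
login_bug = Defect("BUG-102", "Login fails for valid user", severity=1)

print([d.defect_id for d in triage_order([typo_bug, login_bug])])

# Walk the severe defect through resolution, verification, and closure.
for status in ("Assigned", "Fixed", "Verified", "Closed"):
    login_bug.move_to(status)
print(login_bug.defect_id, login_bug.status, "after", len(login_bug.history), "status changes")
```

Restricting the transitions is the point of the sketch: a defect cannot jump from New straight to Closed without being fixed and verified, which mirrors the identification, resolution, verification, and closure activities listed above.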

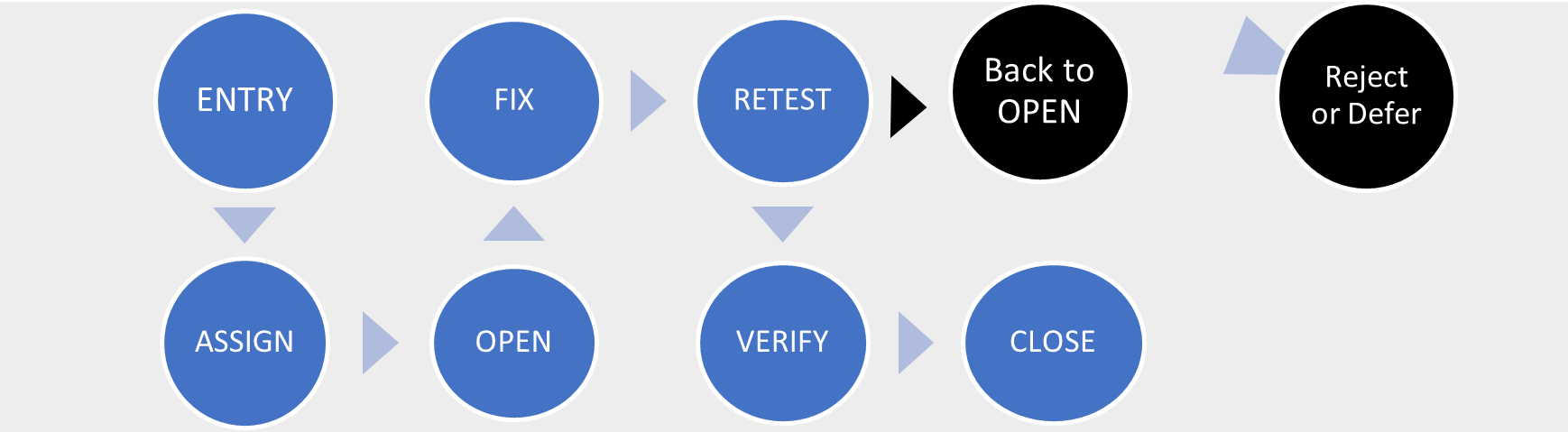

Bug Life Cycle

I refer you to the Bug Life Cycle Flow Diagram. In the diagram you can see nine action steps. These action steps mostly involve changing the status of the reported bug. However, some team interaction usually accompanies each status change.

Bug Life Cycle Flow Diagram

The flow diagram shows the steps of bug reporting. I am introducing it here, but I am not going into detail on it. There is a separate training module that will do a deep dive on this subject.

The process flow chart shows the overall process, which has seven steps: Report, Reproduction, Analysis, Fix, Test, Verification, and Closure.

- Report: The bug is first reported by a tester, developer, or end-user.

- Reproduction: The bug is confirmed and reproduced by the development or test team.

- Analysis: The development team analyzes the bug to determine its cause and the impact it has on the software.

- Fix: The development team creates a fix for the bug.

- Test: The fix is tested to ensure that it resolves the bug and does not appear to cause any additional problems.

- Verification: The fix is verified by the testing team to ensure that it has resolved the bug and does not cause any regressive impacts.

- Closure: The bug is marked as resolved or closed. It can be marked as deferred if the development team has no fix for the defect or chooses to delay a change.

Again, I only want to introduce the chart here, not go into more detail. Also, try not to confuse the process flow with the bug flow. They are related but not the same.